Blog Post

https://blurbusters.com/frame-rate-amplification-technologies-frat-more-frame-rate-with-better-graphics/

The post covers DLSS, Oculus, and some great work by Cambridge researchers: "Temporal Resolution Multiplexing: Exploiting the limitations of spatio-temporal vision for more efficient VR rendering" by Gyorgy Denes et al.

General information from companies that work in this field.

https://porch.com/advice/vr-metaverse-experts-advice

In addition, Apple patents possibly related to upcoming AR/VR hardware, including a 3000 ppi device and an interactive virtual paper, are tracked at:

https://www.patentlyapple.com/augmented-reality/

NVIDIA is sponsoring an "Extend the Omniverse" contest, ending Aug 19, 2022. Participants need to build an extension using Omniverse Kit.

Join at https://www.nvidia.com/en-us/geforce/contests/omniverse-developer-contest-terms-conditions/

Blog Post

libtorch provides the C++ API for the PyTorch framework.

The following libraries are required at the linking step for any C++ program using libtorch with GPU acceleration. The paths are from one example installation:

"C:\Users\aaa\Downloads\opencv\build\x64\vc15\lib\opencv_world454.lib"

"C:\Users\aaa\Downloads\libtorch-win-shared-with-deps-1.8.2+cu111\libtorch\lib\caffe2_nvrtc.lib"

"C:\Users\aaa\Downloads\libtorch-win-shared-with-deps-1.8.2+cu111\libtorch\lib\c10.lib"

"C:\Users\aaa\Downloads\libtorch-win-shared-with-deps-1.8.2+cu111\libtorch\lib\c10_cuda.lib"

"C:\Users\aaa\Downloads\libtorch-win-shared-with-deps-1.8.2+cu111\libtorch\lib\torch.lib"

"C:\Users\aaa\Downloads\libtorch-win-shared-with-deps-1.8.2+cu111\libtorch\lib\torch_cpu.lib"

"C:\Users\aaa\Downloads\libtorch-win-shared-with-deps-1.8.2+cu111\libtorch\lib\torch_cuda.lib"

"C:\Users\aaa\Downloads\libtorch-win-shared-with-deps-1.8.2+cu111\libtorch\lib\torch_cuda_cpp.lib"

"C:\Users\aaa\Downloads\libtorch-win-shared-with-deps-1.8.2+cu111\libtorch\lib\torch_cuda_cu.lib"

"C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v11.3\lib\x64\cublas.lib"

"C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v11.3\lib\x64\cudart.lib"

"C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v11.3\lib\x64\cudnn.lib"

"C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v11.3\lib\x64\cufft.lib"

"C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v11.3\lib\x64\curand.lib"

"C:\Program Files\NVIDIA Corporation\NvToolsExt\lib\x64\nvToolsExt64_1.lib"

The corresponding DLLs from the libtorch package must also be in the current folder or available on PATH.

The libtorch LTS 1.8 package itself can be downloaded from

https://download.pytorch.org/libtorch/lts/1.8/cu111/libtorch-win-shared-with-deps-1.8.2%2Bcu111.zip
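Before linking, it can help to confirm that each import library listed above is actually present on disk. The following is a minimal sketch, assuming the same file names as in the list; the helper name `missing_libs` and the folder arguments are illustrative and not part of libtorch or CUDA.

```python
from pathlib import Path

# Import libraries named in the list above, grouped by the folder they live in.
LIBTORCH_LIBS = [
    "caffe2_nvrtc.lib", "c10.lib", "c10_cuda.lib", "torch.lib",
    "torch_cpu.lib", "torch_cuda.lib", "torch_cuda_cpp.lib", "torch_cuda_cu.lib",
]
CUDA_LIBS = ["cublas.lib", "cudart.lib", "cudnn.lib", "cufft.lib", "curand.lib"]


def missing_libs(libtorch_lib_dir, cuda_lib_dir):
    """Return the expected .lib files that are absent from the two folders."""
    expected = [Path(libtorch_lib_dir) / name for name in LIBTORCH_LIBS]
    expected += [Path(cuda_lib_dir) / name for name in CUDA_LIBS]
    return [str(p) for p in expected if not p.is_file()]


if __name__ == "__main__":
    # Hypothetical locations; substitute the folders from your own installation.
    for path in missing_libs(r"C:\libtorch\lib", r"C:\CUDA\lib\x64"):
        print("missing:", path)
```

An empty result means every library in the two lists was found; OpenCV and NvToolsExt are left out of this sketch and would need the same check.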

Blog Post

FFmpeg commands for creating side-by-side (SBS) videos, scaling, overlays, and streaming, plus CUDA/OpenGL interop code.

https://gist.github.com/prabindh/c8048ac0f4cd6d48e9b682523e5b3c1f
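As an illustration of the SBS case, the sketch below builds an FFmpeg command line that places two videos next to each other using the `hstack` filter. It is not copied from the gist; the helper name `sbs_command` and the file names are placeholders.

```python
import shlex


def sbs_command(left, right, output):
    """Build an ffmpeg command that places two videos side by side."""
    return [
        "ffmpeg",
        "-i", left,
        "-i", right,
        # hstack joins the inputs horizontally; both must have the same height.
        "-filter_complex", "[0:v][1:v]hstack=inputs=2[v]",
        # Only the stacked video stream is mapped, so audio is dropped.
        "-map", "[v]",
        output,
    ]


if __name__ == "__main__":
    # File names are placeholders.
    print(shlex.join(sbs_command("left.mp4", "right.mp4", "sbs.mp4")))
```

The list form can be passed directly to `subprocess.run` without shell quoting concerns.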

Blog Post

A simple browser-based tool to identify the SM version (compute capability) of the GPU in a desktop. This information is required when compiling .cu kernels.

https://gpupowered.org/mygpu/
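Once the SM version is known, it is typically passed to nvcc through the `-gencode` option. The sketch below shows one way to turn a compute capability string into those arguments; the helper name `nvcc_gencode_flags` and the file name `kernel.cu` are illustrative.

```python
def nvcc_gencode_flags(compute_capability):
    """Turn a compute capability such as "7.5" into nvcc -gencode arguments."""
    major, minor = compute_capability.split(".")
    arch = f"{int(major)}{int(minor)}"
    # compute_XX selects the virtual (PTX) architecture, sm_XX the real one.
    return ["-gencode", f"arch=compute_{arch},code=sm_{arch}"]


if __name__ == "__main__":
    # kernel.cu is a placeholder file name.
    print(" ".join(["nvcc"] + nvcc_gencode_flags("7.5") + ["-c", "kernel.cu"]))
```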

Blog Post

User "Bhaal_spawn" has created a fan project for the Voodoo, a first-of-its-kind 3D accelerator, built from LEGO bricks.

https://ideas.lego.com/projects/480e824e-d651-4192-996a-937eb7b4fe98

TPOT Blog Post

TPOT is a partially automated machine learning (AutoML) toolkit that can "discover" pipelines for a given dataset, including the feature engineering steps and the pipeline itself. A detailed comparison with manual tuning is necessary here.

The following code illustrates how TPOT can be employed for performing a simple classification task over the Iris dataset.

    from tpot import TPOTClassifier
    from sklearn.datasets import load_iris
    from sklearn.model_selection import train_test_split
    import numpy as np

    iris = load_iris()
    X_train, X_test, y_train, y_test = train_test_split(
        iris.data.astype(np.float64), iris.target.astype(np.float64),
        train_size=0.75, test_size=0.25, random_state=42)

    tpot = TPOTClassifier(generations=5, population_size=50, verbosity=2, random_state=42)
    tpot.fit(X_train, y_train)
    print(tpot.score(X_test, y_test))
    tpot.export('tpot_iris_pipeline.py')

Running this code should discover a pipeline and export it as tpot_iris_pipeline.py.
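For the comparison with manual tuning mentioned earlier, a hand-built baseline on the same split gives a reference score. The following is only a sketch: the choice of a scaled SVM and the small parameter grid are assumptions made for illustration, not a pipeline that TPOT produced.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import GridSearchCV, train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

iris = load_iris()
# Same split parameters as the TPOT example, so the scores are comparable.
X_train, X_test, y_train, y_test = train_test_split(
    iris.data, iris.target, train_size=0.75, test_size=0.25, random_state=42)

# Manually chosen pipeline: standardize the features, then fit an SVM.
pipeline = make_pipeline(StandardScaler(), SVC())
# Small, hand-picked grid (an assumption; widen it for a fairer comparison).
param_grid = {"svc__C": [0.1, 1, 10], "svc__gamma": ["scale", 0.1, 1.0]}

search = GridSearchCV(pipeline, param_grid, cv=5)
search.fit(X_train, y_train)
baseline_score = search.score(X_test, y_test)
print(search.best_params_, baseline_score)
```

Comparing `baseline_score` with the value printed by `tpot.score` shows whether the automated search found anything a short manual grid search would not.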


CUDA Blog Post

When moving from versions below 2.3.0 to TensorFlow 2.3.0 (rc0/rc2/release), the error below might appear.

Non-OK-status: GpuLaunchKernelstatus: Internal: no kernel image is available for execution on the device

This is because the TF team decided to ship PTX kernels only for compute capability 7.0, to reduce the size of the distributed binaries.

This is outlined in the GPU section of the release notes:

https://github.com/tensorflow/tensorflow/releases/tag/v2.3.0 - TF 2.3 includes PTX kernels only for compute capability 7.0 to reduce the TF pip binary size. Earlier releases included PTX for a variety of older compute capabilities.
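The effect of shipping PTX for a single compute capability can be reasoned about with the general CUDA compatibility rules: precompiled SASS runs on devices of the same major version with an equal or higher minor version, and PTX can be JIT-compiled for devices of equal or higher capability. The sketch below encodes those two rules; the function name `can_run` and the example SASS list are illustrative assumptions, not the actual TensorFlow build configuration.

```python
def can_run(device_cc, sass_ccs, ptx_ccs):
    """Return True if a binary with the given kernels can run on the device.

    Compute capabilities are (major, minor) tuples.
    """
    # Precompiled SASS runs on a device of the same major version whose
    # minor version is equal or higher.
    for major, minor in sass_ccs:
        if device_cc[0] == major and device_cc[1] >= minor:
            return True
    # PTX can be JIT-compiled by the driver for any device whose capability
    # is equal to or higher than the PTX target.
    return any(device_cc >= ptx for ptx in ptx_ccs)


if __name__ == "__main__":
    sass = [(6, 0)]   # hypothetical SASS list, for illustration only
    ptx = [(7, 0)]    # PTX only for compute capability 7.0
    for device in [(5, 0), (6, 1), (8, 6)]:
        print(device, can_run(device, sass, ptx))
```

With these example lists, a (5, 0) device has neither matching SASS nor usable PTX, which is the situation that produces the "no kernel image is available" error.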

GRAPHICS Blog Post

gl-transitions.com provides great special effects for transitions from one surface to another using GLSL ES shaders. It is targeted at WebGL applications, but why not use it in native (C++) applications too?

I wrote up a post on how to integrate these shaders directly into native code using nengl, a wrapper for OpenGL ES 2 applications. It uses OpenGL ES with an EGL context on Windows desktops via glfw3 and libANGLE.

Check out the code on GitHub for a Windows application using libANGLE at

https://github.com/prabindh/nengl

And a more detailed post at,

https://medium.com/@prabindh/using-gl-transitions-for-effects-9e73abfc8fd5

Note - this can be used as is on Linux and other platforms that support OpenGLES2 or OpenGLES3.
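A gl-transitions effect defines only a `vec4 transition(vec2 uv)` function and relies on `progress`, `ratio`, `getFromColor` and `getToColor` being supplied by the host, so a native application has to wrap it into a complete fragment shader before compiling it. The sketch below generates such a shader string; the uniform and varying names `u_from`, `u_to` and `v_uv` are assumptions chosen for this example and are not taken from nengl.

```python
FRAGMENT_TEMPLATE = """\
precision mediump float;
varying vec2 v_uv;         // texture coordinate from the vertex shader (assumed name)
uniform sampler2D u_from;  // surface being transitioned from (assumed name)
uniform sampler2D u_to;    // surface being transitioned to (assumed name)
uniform float progress;    // 0.0 at the start of the transition, 1.0 at the end
uniform float ratio;       // viewport width divided by height

vec4 getFromColor(vec2 uv) { return texture2D(u_from, uv); }
vec4 getToColor(vec2 uv) { return texture2D(u_to, uv); }

%TRANSITION%

void main() {
    gl_FragColor = transition(v_uv);
}
"""

# A simple cross-fade written in the gl-transitions style.
FADE = """\
vec4 transition(vec2 uv) {
    return mix(getFromColor(uv), getToColor(uv), progress);
}
"""


def build_fragment_shader(transition_glsl):
    """Wrap a transition function into a full GLSL ES 1.00 fragment shader."""
    return FRAGMENT_TEMPLATE.replace("%TRANSITION%", transition_glsl.strip())


if __name__ == "__main__":
    print(build_fragment_shader(FADE))
```

The resulting string can be handed to `glShaderSource` in the native application, with `progress` updated every frame.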

CUDA Blog Post

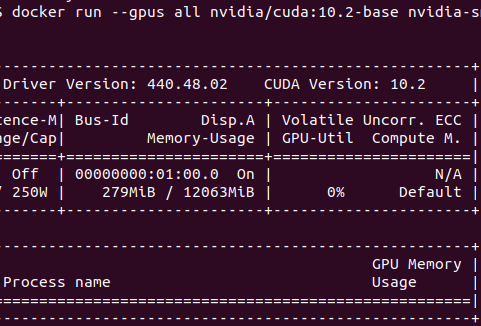

Windows Subsystem for Linux 2 (WSL2) provides a way to use Linux functionality within Windows itself, by running a Linux kernel inside Windows.

This month, NVIDIA and Microsoft announced the availability of the CUDA API in WSL2 as part of the Insider Preview. This enables CUDA-based applications to run in Linux under WSL2, on Windows.

Note: These are command line applications.

More info at,

https://docs.microsoft.com/en-us/windows/win32/direct3d12/gpu-cuda-in-wsl

https://ubuntu.com/blog/getting-started-with-cuda-on-ubuntu-on-wsl-2
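A quick way to check from inside the Linux environment whether it is running under WSL is to look at the kernel version string. The sketch below does that and also looks for a GPU device node; the `/dev/dxg` path is an assumption about how WSL2 exposes the GPU and may differ between builds.

```python
import os


def is_wsl(kernel_version_text):
    """Guess whether a kernel version string comes from a WSL kernel."""
    return "microsoft" in kernel_version_text.lower()


def read_kernel_version(path="/proc/version"):
    """Return the kernel version string, or an empty string if unreadable."""
    try:
        with open(path, encoding="utf-8") as f:
            return f.read()
    except OSError:
        return ""


if __name__ == "__main__":
    print("running under WSL:", is_wsl(read_kernel_version()))
    # Assumed device node for GPU access under WSL2.
    print("GPU device node present:", os.path.exists("/dev/dxg"))
```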

PARABRICKS Blog Post

If you are using Docker version 19.03.5 and nvidia-docker, installer.py is not set up to check the GPU installation correctly. This can result in the error below and a failed installation, even if Docker works correctly with the GPU in other applications and container use cases.

"docker does not have nvidia runtime. Please add nvidia runtime to docker or install nvidia-docker. Exiting..."

installer.py requires the changes below for a successful installation.

https://github.com/prabindh/parabricks-changes/commit/b39f61b8512240bd8c3e7a903f09326fb029893f

Or the complete file below.

https://github.com/prabindh/parabricks-changes/blob/master/installer.py
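One plausible cause is that Docker 19.03 can expose GPUs through the `--gpus` flag without an "nvidia" runtime being registered, so a check that only looks for that runtime fails. The sketch below shows how such a runtime check can be done against the output of `docker info`; it is an illustration only and does not reproduce the changes in the linked commit. The function names are hypothetical.

```python
import json
import subprocess


def has_nvidia_runtime(docker_info_json):
    """Return True if the docker info JSON lists an 'nvidia' runtime."""
    info = json.loads(docker_info_json)
    return "nvidia" in (info.get("Runtimes") or {})


def docker_info():
    """Return the output of `docker info` formatted as JSON."""
    result = subprocess.run(
        ["docker", "info", "--format", "{{json .}}"],
        capture_output=True, text=True, check=True)
    return result.stdout


if __name__ == "__main__":
    try:
        print("nvidia runtime registered:", has_nvidia_runtime(docker_info()))
    except (OSError, ValueError, subprocess.CalledProcessError) as exc:
        print("could not query docker:", exc)
```

A result of False on a machine where `docker run --gpus all ...` works would be consistent with the installer error quoted above.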

The remaining steps in the germline pipeline work as documented.

Steps in the Pipeline:

Alignment of Reads with Reference

Coordinate Sorting

Marking Duplicate BAM Entries

Base Quality Score Recalibration (BQSR) of the Sample

Apply BQSR for the Sample

Germline Variant Calling

Read more at,

https://www.parabricks.com/germline/

Blog Post

Analysis of Genomic data with Parabricks

NVIDIA PARABRICKS

Analyzing genomic data is computationally intensive. Time and cost are significant barriers to using genomics data for precision medicine.

The NVIDIA Parabricks Genomics Analysis Toolkit breaks down those barriers, providing GPU-accelerated genomic analysis. Analyses that once took days can now be completed in under an hour. Choose to run specific accelerated tools or full commonly used pipelines with outputs specific to your requirements.

https://www.developer.nvidia.com/nvidia-parabricks
